Scammers profit from Turkey-Syria earthquake

Scammers are using the earthquakes in Turkey and Syria to try to trick people into donating to fake causes, security experts have warned.

These scams claim to raise money for survivors left without heat or water after the disasters, which have killed more than 35,000 people.

But instead of helping those in need, scammers are channelling donations away from real charities and into their own PayPal accounts and cryptocurrency wallets.

We’ve identified some of the main methods used by scammers, and tools you can use to double-check before donating.

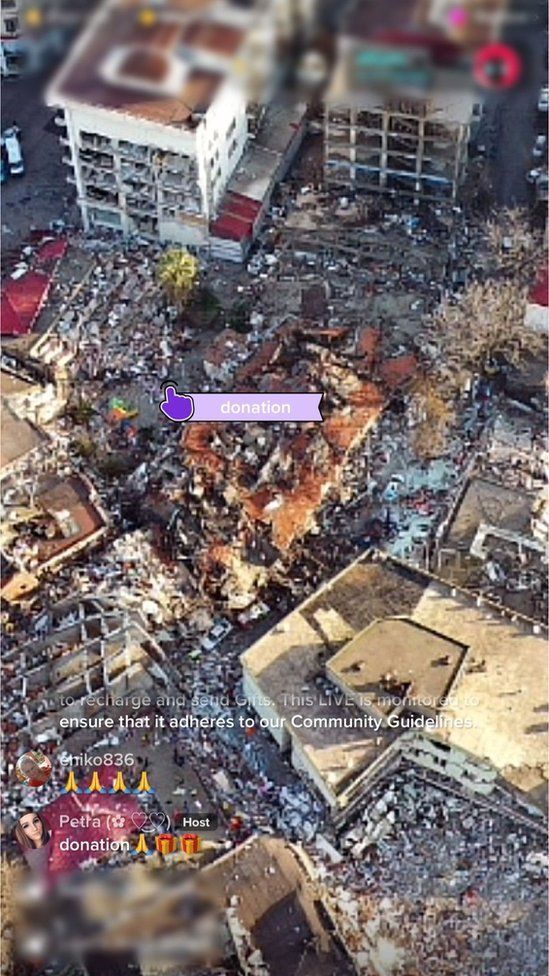

On TikTok Live, content creators can make money by receiving digital gifts. Now, TikTok accounts are posting photos of devastation, looped footage and recordings of TVs showing rescue efforts, whilst asking for donations.

Captions include phrases like “Let’s help Turkey”, “Pray for Turkey” and “Donate for earthquake victims”.

One account, which was live for over three hours, showed a pixelated aerial image of destroyed buildings, accompanied by sound effects of explosions. Off-camera, a male voice laughs and speaks in Chinese. The video’s caption is “Let’s help Turkey. Donation”.

Another video shows a picture of a distressed child running from an explosion. The livestream host’s message is “Please help achieve this goal”, an apparent plea for TikTok gifts.

But the photo of the child is not from last week’s earthquakes. A reverse image search showed that the same image had been posted on Twitter in 2018 with the caption “Stop Afrin Genocide”. Afrin is a city in north-western Syria from which Turkish forces and their allies in the Syrian opposition ousted a Kurdish militia that year.

Another word of caution about gifting on TikTok: a BBC investigation found TikTok takes up to 70% of the proceeds of digital gifts, although TikTok says it takes less than that.

A TikTok spokesperson told the BBC: “We are deeply saddened by the devastating earthquakes in Turkey and Syria and are contributing to aid earthquake relief efforts.

“We’re also actively working to prevent people from scamming and misleading community members who want to help.”

On Twitter, people are sharing emotive images alongside links to cryptocurrency wallets asking for donations.

One account posted the same appeal eight times in 12 hours, with an image of a firefighter holding a small child amid collapsed buildings.

The picture, however, is not real. Greek newspaper OEMA reports that it was created by Panagiotis Kotridis, Major General of the Aegean fire brigade, using the artificial intelligence software Midjourney.

AI image generators often make mistakes, and Twitter users were quick to spot that this firefighter has six digits on his right hand. To verify this further, we asked colleagues from the BBC’s tech research hub, the Blue Room (part of BBC Research & Development), to try to generate similar images using the same software.

They asked the software for an “image of firefighter in aftermath of an earthquake rescuing young child and wearing helmet with Greek flag”, and it returned a set of similar options.

Furthermore, one of the crypto wallet addresses had been used in scam and spam tweets from 2018. The other address had been posted on Russian social media website VK alongside pornographic content.

When the BBC contacted the person tweeting the appeal, they denied it was a scam. They said they had poor connectivity, but answered our questions on Twitter using Google Translate.

“My aim is to be able to help people affected by the earthquake if I manage to raise funds”, they said. “Now people are cold in the disaster area, and especially babies do not have food. I can prove this process with receipts.”

However, they have not yet sent us receipts or proof of their identity.

Source: BBC